Let’s take a look at the gamma distribution:

$$ f(x) = \frac{1}{\Gamma(\alpha)\theta^{\alpha}}x^{\alpha-1}e^{-x/\theta}$$

I don’t know about you, but I think it looks pretty horrible. Typing it up in LaTeX is no fun and gave me second thoughts about writing this post, but I’ll plow ahead.

First, what does it mean? One interpretation of the gamma distribution is that it’s the theoretical distribution of waiting times until the \( \alpha\)-th change for a Poisson process. In another post I derived the exponential distribution, which is the distribution of times until the first change in a Poisson process. The gamma distribution models the waiting time until the 2nd, 3rd, 4th, 38th, etc, change in a Poisson process.

As we did with the exponential distribution, we derive it from the Poisson distribution. Let W be the random variable that represents waiting time. Its cumulative distribution function then would be

$$ F(w) = P(W \le w) = 1 -P(W > w)$$

But notice that \( P(W > w)\) is the probability of fewer than \( \alpha\) changes in the interval [0, w]. In a Poisson process with mean \( \lambda w\), that probability is the sum of the Poisson probabilities of \( k = 0, 1, \ldots, \alpha - 1\) changes, so

$$ F(w) = 1 - \sum_{k=0}^{\alpha-1}\frac{(\lambda w)^{k}e^{- \lambda w}}{k!}$$
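The CDF above can be sanity-checked by simulation. The following is a minimal sketch, assuming integer \( \alpha\) and using the fact that gaps between changes in a Poisson process are exponential; the function names and the values w = 2, \( \alpha = 3\), \( \lambda = 1.5\) are illustrative choices, not part of the derivation.

```python
import math
import random

def gamma_cdf_poisson(w, alpha, lam):
    """F(w) = 1 - sum_{k=0}^{alpha-1} (lam*w)^k e^{-lam*w} / k!  (integer alpha)."""
    tail = sum((lam * w) ** k * math.exp(-lam * w) / math.factorial(k)
               for k in range(alpha))
    return 1.0 - tail

def simulate_cdf(w, alpha, lam, n_trials=100_000, seed=0):
    """Estimate P(W <= w), where W is the sum of alpha exponential gaps."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_trials):
        wait = sum(rng.expovariate(lam) for _ in range(alpha))
        if wait <= w:
            hits += 1
    return hits / n_trials

exact = gamma_cdf_poisson(2.0, 3, 1.5)
approx = simulate_cdf(2.0, 3, 1.5)
print(exact, approx)
```

The two numbers should agree to about two decimal places; the simulated value carries ordinary Monte Carlo noise.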

To find the probability density function we take the derivative of \( F(w)\). But before we do that we can simplify matters a little by pulling the \( k = 0\) term out of the summation:

$$ = 1 - \frac{(\lambda w)^{0}e^{-\lambda w}}{0!}-\sum_{k=1}^{\alpha-1}\frac{(\lambda w)^{k}e^{- \lambda w}}{k!} = 1 - e^{-\lambda w}-\sum_{k=1}^{\alpha-1}\frac{(\lambda w)^{k}e^{- \lambda w}}{k!}$$

Why did I know to do that? Because my old statistics book did it that way. Moving on…

$$ F'(w) = 0 - e^{-\lambda w}(-\lambda)-\sum_{k=1}^{\alpha-1}\frac{k!\left[(\lambda w)^{k}e^{- \lambda w}(-\lambda)+e^{- \lambda w}k( \lambda w)^{k-1}\lambda\right]}{(k!)^{2}}$$

After lots of simplifying…

$$ = \lambda e^{-\lambda w}+\lambda e^{-\lambda w}\sum_{k=1}^{\alpha-1}[\frac{(\lambda w)^{k}}{k!}-\frac{(\lambda w)^{k-1}}{(k-1)!}]$$
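For anyone who wants the skipped steps, here is a sketch of how a single term of the sum differentiates. It uses the product rule on \( (\lambda w)^{k}e^{-\lambda w}\), treats \( k!\) as a constant, and relies on the identity \( k/k! = 1/(k-1)!\):

$$ \frac{d}{dw}\left[\frac{(\lambda w)^{k}e^{-\lambda w}}{k!}\right] = \frac{k(\lambda w)^{k-1}\lambda e^{-\lambda w} - \lambda(\lambda w)^{k}e^{-\lambda w}}{k!}$$

$$ = \lambda e^{-\lambda w}\left[\frac{(\lambda w)^{k-1}}{(k-1)!} - \frac{(\lambda w)^{k}}{k!}\right]$$

Each of these terms is subtracted in \( F(w)\), which flips the sign inside the brackets and gives the summand shown above.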

And we’re done! Technically we have the gamma probability distribution. But it’s a little too bloated for mathematical taste. And of course it doesn’t match the form of the gamma distribution I presented in the beginning, so we have some more simplifying to do. Let’s carry out the summation for a few terms and see what happens:

$$ \sum_{k=1}^{\alpha-1}[\frac{(\lambda w)^{k}}{k!}-\frac{(\lambda w)^{k-1}}{(k-1)!}] = $$

$$ [\lambda w - 1] + [\frac{(\lambda w)^{2}}{2!}-\lambda w]+[\frac{(\lambda w)^{3}}{3!} - \frac{(\lambda w)^{2}}{2!}] +[\frac{(\lambda w)^{4}}{4!} - \frac{(\lambda w)^{3}}{3!}] + \ldots$$

$$ + [\frac{(\lambda w)^{\alpha - 2}}{(\alpha - 2)!} - \frac{(\lambda w)^{\alpha - 3}}{(\alpha - 3)!}] + [\frac{(\lambda w)^{\alpha - 1}}{(\alpha - 1)!} - \frac{(\lambda w)^{\alpha - 2}}{(\alpha - 2)!}] $$

Notice that the sum telescopes: besides the -1 and the 2nd to last term, everything cancels, so we’re left with

$$ = -1 + \frac{(\lambda w)^{\alpha -1}}{(\alpha -1)!}$$
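The cancellation is easy to confirm numerically. This is a small sketch, assuming integer \( \alpha\), with `x` standing in for \( \lambda w\); the function names are made up for illustration.

```python
import math

def telescoping_sum(x, alpha):
    """Sum over k = 1..alpha-1 of x^k/k! - x^(k-1)/(k-1)!, term by term."""
    return sum(x ** k / math.factorial(k) - x ** (k - 1) / math.factorial(k - 1)
               for k in range(1, alpha))

def closed_form(x, alpha):
    """What is left after cancellation: -1 + x^(alpha-1)/(alpha-1)!."""
    return -1.0 + x ** (alpha - 1) / math.factorial(alpha - 1)

for alpha in (1, 2, 5, 10):
    print(alpha, telescoping_sum(3.0, alpha), closed_form(3.0, alpha))
```

Each row should show the same value twice, up to floating-point rounding.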

Plugging that back into the gamma pdf gives us

$$ = \lambda e^{-\lambda w} + \lambda e^{-\lambda w}[-1 + \frac{(\lambda w)^{\alpha -1}}{(\alpha -1)!}]$$

This simplifies to

$$ =\frac{\lambda (\lambda w)^{\alpha -1}}{(\alpha -1)!}e^{-\lambda w}$$
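As a check on the algebra, the lean formula should match the slope of the CDF we started from. Below is a minimal sketch, assuming integer \( \alpha\); the central-difference step `h` and the test point are arbitrary choices of mine.

```python
import math

def cdf(w, alpha, lam):
    """F(w) = 1 - sum_{k=0}^{alpha-1} (lam*w)^k e^{-lam*w} / k!."""
    tail = sum((lam * w) ** k * math.exp(-lam * w) / math.factorial(k)
               for k in range(alpha))
    return 1.0 - tail

def pdf(w, alpha, lam):
    """The simplified density: lam * (lam*w)^(alpha-1) / (alpha-1)! * e^{-lam*w}."""
    return lam * (lam * w) ** (alpha - 1) / math.factorial(alpha - 1) * math.exp(-lam * w)

def numeric_derivative(f, w, h=1e-5):
    """Central-difference approximation of f'(w)."""
    return (f(w + h) - f(w - h)) / (2 * h)

w, alpha, lam = 2.0, 3, 1.5
slope = numeric_derivative(lambda t: cdf(t, alpha, lam), w)
print(slope, pdf(w, alpha, lam))
```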

Now that’s a lean formula, but still not like the one I showed at the beginning. To get the “classic” formula we do two things:

- Let \( \lambda = \frac{1}{\theta}\), just as we did with the exponential

- Use the fact that \( (\alpha -1)! = \Gamma(\alpha)\)

Doing that takes us to the end:

$$ = \frac{\frac{1}{\theta}(\frac{w}{\theta})^{\alpha-1}}{\Gamma(\alpha)}e^{-w/\theta} = \frac{1}{\theta}(\frac{1}{\theta})^{\alpha -1}w^{\alpha - 1}\frac{1}{\Gamma(\alpha)}e^{-w/\theta} = (\frac{1}{\theta})^{\alpha}\frac{1}{\Gamma(\alpha)}w^{\alpha -1}e^{-w/\theta}$$

$$ = \frac{1}{\Gamma(\alpha)\theta^{\alpha}}w^{\alpha-1}e^{-w/\theta}$$
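The two substitutions can also be checked in code. This sketch assumes Python's standard `math.gamma` for \( \Gamma\) and compares the rate form (with \( \lambda\) and a factorial) against the classic form (with \( \theta\) and \( \Gamma\)); the function names and test values are illustrative.

```python
import math

def pdf_rate_form(w, alpha, lam):
    """lam * (lam*w)^(alpha-1) / (alpha-1)! * e^{-lam*w}, integer alpha only."""
    return lam * (lam * w) ** (alpha - 1) / math.factorial(alpha - 1) * math.exp(-lam * w)

def pdf_classic_form(w, alpha, theta):
    """w^(alpha-1) e^{-w/theta} / (Gamma(alpha) * theta^alpha)."""
    return w ** (alpha - 1) * math.exp(-w / theta) / (math.gamma(alpha) * theta ** alpha)

theta = 2.0
for alpha in (1, 2, 3, 4):
    print(alpha, pdf_rate_form(3.0, alpha, 1 / theta), pdf_classic_form(3.0, alpha, theta))
```

The classic form has the advantage that it still evaluates when \( \alpha\) is not an integer, since \( \Gamma\) is defined there and the factorial is not.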

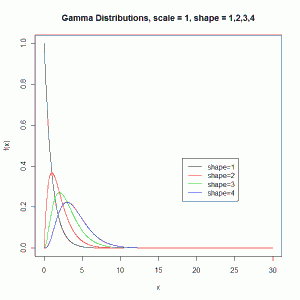

We call \( \alpha \) the shape parameter and \( \theta \) the scale parameter because of their effect on the shape and scale of the distribution. Holding \( \theta\) (scale) at a set value and trying different values of \( \alpha\) (shape) changes the shape of the distribution (at least when you go from \( \alpha =1\) to \( \alpha =2\)):

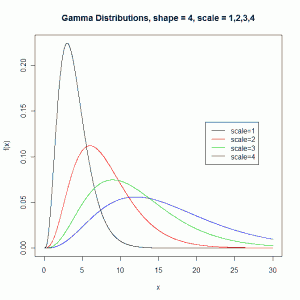

Holding \( \alpha\) (shape) at a set value and trying different values of \( \theta\) (scale) changes the scale of the distribution:

In the applied setting \( \theta\) (scale) is the mean wait time between events and \( \alpha\) is the number of events. If we look at the first figure above, we’re holding the wait time at 1 and changing the number of events. We see that the probability of waiting 5 minutes or longer increases as the number of events increases. This is intuitive as it would seem more likely to wait 5 minutes to observe 4 events than to wait 5 minutes to observe 1 event, assuming a one minute wait time between each event. The second figure holds the number of events at 4 and changes the wait time between events. We see that the probability of waiting 10 minutes or longer increases as the time between events increases. Again this is pretty intuitive as you would expect a higher probability of waiting more than 10 minutes to observe 4 events when there is a mean wait time of 4 minutes between events versus a mean wait time of 1 minute.
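To put numbers on those two comparisons, here is a rough sketch that computes the tail probability \( P(W > w)\) from the Poisson sum used in the derivation. It assumes integer \( \alpha\); the grids of values (1 through 4 for both parameters) are my choice and may not match the figures exactly.

```python
import math

def prob_wait_longer(w, alpha, theta):
    """P(W > w): fewer than alpha events in [0, w] when the mean gap is theta."""
    mean_count = w / theta  # this is lambda * w with lambda = 1 / theta
    return sum(mean_count ** k * math.exp(-mean_count) / math.factorial(k)
               for k in range(alpha))

# Mean wait time fixed at 1, number of events varies: P(W > 5)
by_shape = [prob_wait_longer(5, alpha, 1) for alpha in (1, 2, 3, 4)]

# Number of events fixed at 4, mean wait time varies: P(W > 10)
by_scale = [prob_wait_longer(10, 4, theta) for theta in (1, 2, 3, 4)]

print(by_shape)
print(by_scale)
```

Both lists should come out in increasing order, matching the intuition described above.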

Finally notice that if you set \( \alpha = 1\), the gamma distribution simplifies to the exponential distribution.
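Written out, using \( \Gamma(1) = 0! = 1\), the substitution looks like this:

$$ f(x) = \frac{1}{\Gamma(1)\theta^{1}}x^{1-1}e^{-x/\theta} = \frac{1}{\theta}e^{-x/\theta}$$

With \( \lambda = \frac{1}{\theta}\) this is \( \lambda e^{-\lambda x}\), the exponential density.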

Update 5 Oct 2013: I want to point out that \( \alpha \) and \( \theta \) can take continuous values like 2.3, not just integers. So what I’ve really derived in this post is the relationship between the gamma and Poisson distributions.
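One way to see that a non-integer shape still gives a proper density is to integrate the classic formula numerically. This is only a sketch: the midpoint rule, the upper limit of 60, and the step count are arbitrary choices of mine, and it illustrates the claim rather than proving it.

```python
import math

def gamma_pdf(x, alpha, theta):
    """Classic gamma density; works for any alpha > 0 through math.gamma."""
    return x ** (alpha - 1) * math.exp(-x / theta) / (math.gamma(alpha) * theta ** alpha)

def integrate_midpoint(f, a, b, n=200_000):
    """Midpoint-rule approximation of the integral of f over [a, b]."""
    h = (b - a) / n
    return h * sum(f(a + (i + 0.5) * h) for i in range(n))

total = integrate_midpoint(lambda x: gamma_pdf(x, 2.3, 1.0), 0.0, 60.0)
print(total)
```

The result should be very close to 1, as it must be for a probability density.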

First of all I would like to express my great admiration for this wonderful derivation. However, I still have a problem. This derivation is perfect when alpha is a positive integer n. For this case the gamma distribution can be described as the distribution of the sum of n independent exponentially distributed random variables, each with the same exponential distribution. My question now is: how would you describe the gamma distribution for a continuous alpha, 0 < alpha?

You raise a good point and I realize now this post is kind of wrong. Of course alpha can take continuous values. I describe the gamma distribution as if it only applies to waiting times in a Poisson process. What I should have said is something like “the waiting time W until the alpha-th change in a Poisson process has a gamma distribution.” In other words I derived the relationship between the gamma and Poisson distributions and should have clearly stated that. I will update the post accordingly in a few moments.

To derive the distribution for continuous alpha, you start with the gamma function. Casella and Berger demonstrate this in their book, Statistical Inference, on page 99.

Hey, this post was really helpful, thanks. In it you mention your “old statistics book”. Could you let me know the name of this book?

Thanks-

Glad the post was helpful. The book I referred to is Probability and Statistical Inference, 7th ed., by Hogg and Tanis.

Your valuable posts enable my self-learning! Thank you.

Some issues with the presentation:

1. Your phrasing “The probability of that in a Poisson process with mean λw is” is a bit misleading because it comes after making the statement about P(W > w), yet the following probability shown is the one for F(w).

2. There’s an extra parenthesis when you first show the derivative.

3. The derivative is F'(w), not F'(x).

4. Some important steps were skipped, for instance it’s not clear where the extra factorials come from when you derive.

Overall, the proof is nice and that’s what I was looking for, but the bad presentation made me look for it elsewhere.

Thanks for the feedback.